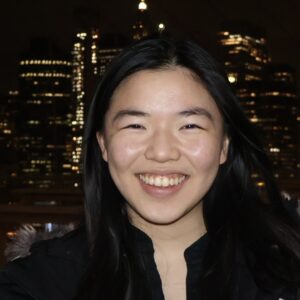

Alicia Li

MIT EECS | Nadar Foundation Undergraduate Research and Innovation Scholar

Towards Generalizable Planning with Transformers

2025–2026

Electrical Engineering and Computer Science; Mathematics

- AI and Machine Learning

- Natural Language and Speech Processing

Kim, Yoon

Transformer-based large language models (LLMs) are increasingly used for reasoning and planning tasks, yet their ability to construct robust plans across diverse search problems is not well established. Prior approaches, such as training transformers on execution traces from symbolic planners, have limited scalability and generalize poorly to high-dimensional or long-horizon problems. This research investigates the theoretical capabilities and limitations of transformer-based models in solving planning and search problems. By characterizing these limits and exploring potential architectural modifications, this work aims to advance the development of AI systems capable of tackling complex, real-world decision-making tasks.

I’m participating in SuperUROP because I want to work on novel NLP architectures. Specifically, I’m interested in planning capabilities, an interest inspired by my previous UROP in robot planning and learning. I’m excited to learn more about NLP architectures, and I hope to publish a paper.